Well thanks! ;-)

Ahh yes I meant as opposed to Twitter and Facebook. But I worded it badly 😁

Ahh nice. I know what I’ll be doing tomorrow.

(I'll add links / descriptions later)

I host the following fediverse stuff:

- Lemmy (you're looking at it)

- Mastodon (3 instances)

- Calckey (oh sorry, now Firefish)

- Pixelfed

- Misskey

- WriteFreely

- Funkwhale

- Akkoma (2 instances)

- PeerTube

And these are other things I host:

- Kimai2

- Matrix/Synapse

- SilverBullet

- XWiki (3 instances)

- CryptPad (2 instances)

- Gitea

- Grafana

- HedgeDoc

- Minecraft

- Nextcloud

- Nginx Proxy Manager

- Paperless-ngx

- TheLounge

- Vaultwarden

- Zabbix

- Zammad

I'm still running Synapse. Could I migrate it to Dendrite or another homeserver? Or would I have to re-install and lose all messages?

- Powerful: Organizations & team permissions, CI integration, Code Search, LDAP, OAuth and much more. If you have advanced needs, Forgejo has you covered.

Selfhosters wanna host. But many people don't (ergo: lemmy.world and mastodon.world; GitHub, anyone?), so maybe people would like forgejo.world. And if not, I'll use it myself! :-)

Your email address is only stored in the database, to which no one but me has access, and it is shown on your Settings page when you are logged in. So unless there's a security flaw in Lemmy, your email address should be safe.

Really awesome work. We need more Lemmy servers!

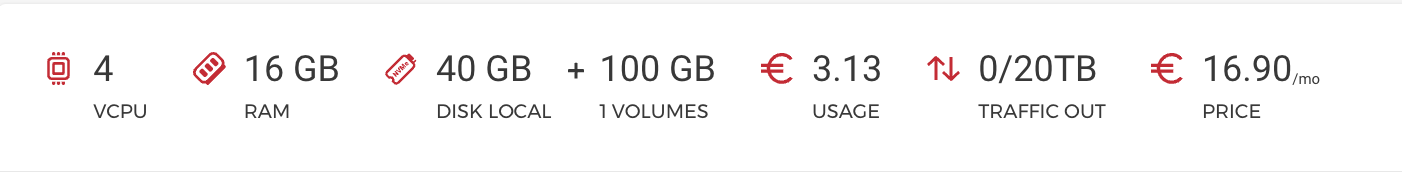

All on Hetzner.

I run lemmy.world on a VPS at Hetzner. They are cheap and good. Storage: after 11 days I now have 2 GB of images and a 2 GB database.

I did register writefreely.world, planning to host that one day, but I need some more selfhosting nerds to help out with running all these instances :-) The foundation is already running a few dozen Fedi instances :-D