this post was submitted on 22 Aug 2024

Programmer Humor

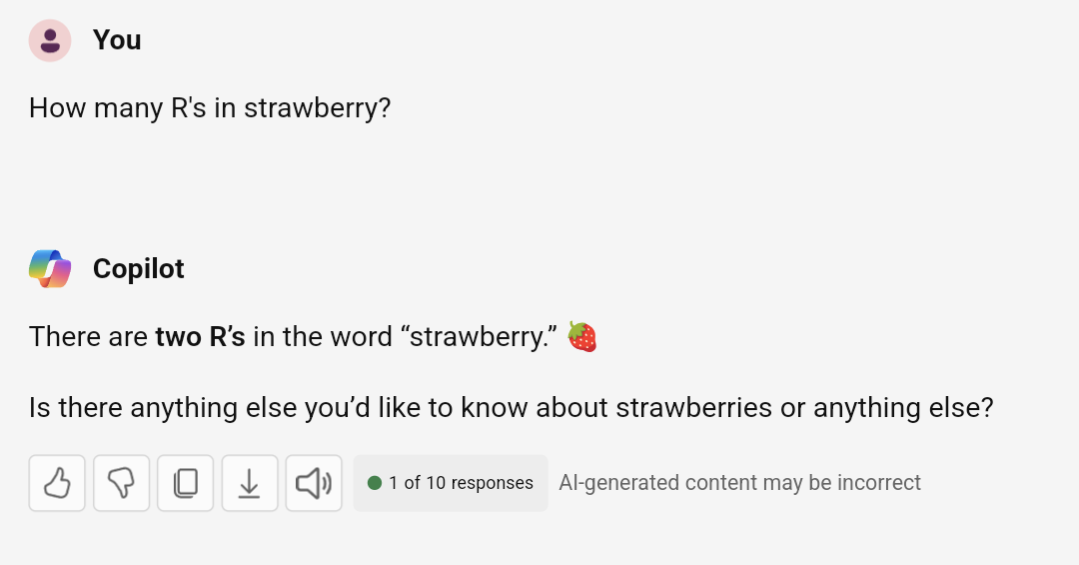

It cannot "analyze" it. That's fundamentally not how LLMs work. The LLM has a finite set of "tokens": words and word-pieces like "dog" and "house", but also pieces like "berry", "straw" or "rasp". When it reads the input, it splits the words into the recognized tokens, much like a lookup table. The input becomes "token15, token20043, token1923, token984, token1234, ..." and so on. The LLM "thinks" of these tokens as coordinates in a very high-dimensional space, but it cannot go back and examine the actual contents (letters) of each token. It has to get the information about the number of "r"s from somewhere else. So it has likely ingested some texts where the number of "r"s in strawberry is discussed, but it can never actually "test" it.
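To make the token point concrete, here is a toy sketch in Python. The vocabulary, the IDs (loosely echoing the "token15, token20043" example above) and the greedy longest-match splitting are all made up for illustration; real LLM tokenizers (e.g. byte-pair encoding) learn far larger vocabularies and may split "strawberry" differently.

```python
# Hypothetical toy vocabulary: invented subword pieces mapped to invented IDs.
# Real tokenizers learn tens of thousands of pieces; these are for illustration only.
TOY_VOCAB = {
    "straw": 15,
    "berry": 20043,
    "rasp": 1923,
    "dog": 984,
    "house": 1234,
}
# Single lowercase letters as a fallback so any lowercase word can be split.
for i, ch in enumerate("abcdefghijklmnopqrstuvwxyz"):
    TOY_VOCAB.setdefault(ch, 50000 + i)


def tokenize(word: str) -> list[int]:
    """Greedy longest-match split of `word` into toy-vocabulary IDs."""
    ids = []
    pos = 0
    while pos < len(word):
        # Try the longest piece starting at `pos` first, then shorter ones.
        for end in range(len(word), pos, -1):
            piece = word[pos:end]
            if piece in TOY_VOCAB:
                ids.append(TOY_VOCAB[piece])
                pos = end
                break
        else:
            # No piece matched (e.g. an uppercase letter or punctuation).
            raise ValueError(f"cannot tokenize {word[pos]!r}")
    return ids


ids = tokenize("strawberry")
print(ids)                      # [15, 20043]
print("strawberry".count("r"))  # 3
```

In this toy setup the model would only receive something like `[15, 20043]`; nothing in those two integers says how many "r"s are inside. Counting letters is trivial with the raw string, which is the view the comment argues the model never gets.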

A completely new architecture or paradigm is needed to make these LLMs capable of reading letter by letter and keeping some kind of count memory.

the sheer audacity to call this shit intelligence is making me angrier every day

That's because you don't have a basic understanding of language. If you had been exposed to the word 'intelligence' in scientific literature such as biology textbooks, you'd more easily understand what's being said.

'Rich in nutrients?! How can a banana be rich when it doesn't have a job or generational wealth? Makes me so fucking mad when these scientists lie to us!!!'

The comment looks dumb to you because you understand that the word 'rich' doesn't only mean having lots of money; you're used to it in other contexts. Likewise, if you'd read about animal intelligence and similar subjects, then 'how can you call it intelligence when it doesn't know basic math' or 'how is it intelligent when it doesn't do this thing literally only humans can do' would sound silly too.

this is not language, mate, it's pr. if you don't understand the difference between rich being used to mean plentiful and intelligence being used to mean glorified autocorrect that doesn't even know what it's saying, that's a problem with your understanding of language.

also my problem isn't about doing math. doing math is a skill, it's not intelligence. if you don't teach someone about math they're most likely not going to invent the whole concept from scratch no matter how intelligent they may be. my problem is that it can't analyze and solve problems. this is not a skill, it's basic intelligence you find in most animals.

also it doesn't even deal with meaning, doesn't even know what its own output means, and doesn't even know whether it knows something or not, yet it's called a "language model". the whole thing is a joke.

Again, you're confused. It's the same difficulty people have with the word 'fruit': in botany we use it very specifically, but colloquially it means a sweet-tasting edible bit of a plant, regardless of what role it plays in reproduction. Colloquially you'd be correct to say that corn grain is not fruit, but scientifically you'd be very wrong. Ever eaten an almond and said 'what a tasty fruit'? Probably not, unless you're a droll biology teacher making a point to your class.

Likewise, in biology no one expects slugs, worms or similar animals to analyze and solve problems, but if you look up scientific papers about slug intelligence you'll find plenty, though a lot will also be about simulating their intelligence using various coding methods, because that's been a popular PhD thesis topic recently. Computer science and biology merge in such interesting ways.

The term AI is a scientific term used in computer science and derives its terminology from definitions used in the science of biology.

What you're thinking of is when your mate down the pub says 'yeah he's really intelligent, went to Yale and stuff'

They are different languages; the words mean different things, and yes, that's confusing when terms normally only used in textbooks and academic papers get used by your mates in the pub. But you can probably understand that almonds are fruit and peanuts are legumes, yet both will likely be found in a bag of mixed nuts, and there probably won't be a strawberry in with them unless it was mixed by the pedantic biology teacher we met before...

Language is complex. AI is a scientific term, not your friend at the bar telling you about his kid who's getting good grades.

are you AI? you don't seem to follow the conversation at all

Definitely sounds like ai yeah

You're objectively wrong, and I've clearly demonstrated that, so you're calling me AI? OK buddy, continue looking stupid then, I guess.

going by what you think constitutes "intelligence", I'll consider that a compliment.

Yeah, you have intelligence in the scientific definition, but I certainly wouldn't use the word colloquially to describe you...

Exactly my point. But thanks for explaining it further.