$449 is still massively overpriced for a midrange card.

Prices will never come down to previous gen card prices. We’ve passed the threshold. NVidia will keep their prices high because there is significant demand for their chips outside of gaming and AMD follows in lockstep.

This is probably true, but it doesn't mean that individual, non-AI/crypto consumers have to accept it, and largely, they haven't. All it takes is for Nvidia/AMD stock to drop from its overinflated level for card prices to come down.

And the stock dip is unlikely since they're doing gangbusters on the datacenter front. Nvidia has enough gas in the tank to weather the hit from low consumer Gaming sales.

I'll be holding onto my 1060 6GB until it croaks.

I'm still rocking an R9 380 w/ 2GB VRAM. Upgrading just isn't feasible for me since the midrange cards are all super overpriced.

They'll come down when the AI bubble bursts. Never say never.

NVIDIA has been struggling in recent years to find use cases for their graphics cards. That's why they're pushing towards raytracing, because rasterization has hit its limit and people no longer need to upgrade their GPU for that (they tried pushing towards 8k resolution, but that's complete BS for screens outside of cinemas). However, most people don't care about having better reflections and indirect lighting in their games, so they're struggling to get anywhere in the gaming market. Now NVIDIA is moving into other markets for their cards that don't involve gamers, and they're just left as an afterthought.

I don't think that this will ever change again. Games like DOTA, Fortnite and Minecraft are hugely popular, and they don't need raytracing at all.

I personally tried going towards fluid simulations for games, because those also need a ton of GPU resources if calculated at runtime (that was the topic of my Master's thesis). However, there have barely been any games featuring dynamic water. It's apparently not interesting enough to design games around.

Hopefully the last generation cards will drop some after the official launch. Sucks seeing rx6700-6800xt all hovering around $600-$900 here in Canada.

Ooof. I just bought a 6700xt for £240 and thought that was a bit too pricey.

Where? I'm just seeing the 6700 XT for £350 on Amazon.co.uk. But for my old i5 7600K, the £240 mark would be better :)

It was used on eBay.

Ah got you .. (slightly jealous & sipping beer sound .. 😆 )

Bought a C$500 6750xt on Newegg a while ago, it still comes up for that price a lot.

No, $449 is great for a midrange card. It's okay to not like spending money but this price is impressive.

No it's not. If it was $350 I'd be impressed, but even in this day that's $100 overpriced.

Just remember you get Starfield for free, so it's kind of like a $70 savings. I personally wanted the card plus the game, so it really works out as a win-win. I think $430 for the 7800 XT sounds reasonable for someone who hasn't upgraded since 2016 and currently has an RX 480. It might be a midrange card, but I honestly don't know what more I could ask for if it runs everything maxed at 1440p and most things at 4K at 60fps or over.

As someone who dropped $550 on a 1080 (not Ti) years ago and is still using it for VR, I could be tempted to go AMD. Nvidia has gone off the deep end with pricing and I can't see myself going that route. I'm starting to hit some bottlenecks and I'm sure I'll upgrade in the next 3 years.

I went from a 1070 to a 6700 XT myself for the same reason. nVidia can fuck off.

Too bad AMD has always lagged in VR performance. Especially if you were trying to do wireless Quest, I think the encoding latency was quite a bit higher.

I use an AMD GPU and I stream to my Quest 2 a lot. I've only ever had one app with issues: Google Earth VR, which I understand they quit developing. I have never noticed latency either.

I nabbed a 6800 XT for $550 last fall also for VR and the same one's even cheaper now (but the 7800 XT probably has it beat assuming the same or greater performance)

I’m in the same situation and at the current overpriced frame cost I’ll be waiting either for the next gen or the secondhand market once the next gen drops or just keeping the card another year.

Will reserve judgement till all the reviews are in but if it lives up to what AMD is saying could finally be a reason to build a new PC.

It’s certainly lived up to it being a huge waste of overpriced money

7800 XT looks like the best value at 16GB.

I know the Arc a770 has a 16GB variant for a lot cheaper, but it seems like its performance is generally way lower.

However, both vendors have released major driver updates recently so I might be judging by outdated benchmarks.

Since NVIDIA shit the bed this gen for everything under the $599 4070, these seem like decent options at their respective price points.

I'm excited to see the benchmarks

This is the best summary I could come up with:

“I just wish there were an option between the $300 to $400 marks that offered enough performance to push us firmly into the 1440p era.” That was my colleague Tom Warren’s conclusion reviewing the $399 Nvidia RTX 4060 Ti and $269 AMD Radeon RX 7600.

The company claims both cards can average over 60fps in the latest games at 1440p with maximum settings and no fancy upscaling tricks — including troubled PC ports like The Last of Us Part I and Star Wars Jedi: Survivor.

AMD says FSR 3 is already slated for Cyberpunk 2077, Forspoken, Immortals of Aveum, Avatar: Frontiers of Pandora, Warhammer 40,000: Space Marine 2, Frostpunk 2, Squad, Starship Troopers: Extermination, Black Myth: Wukong, Crimson Desert, and Like a Dragon: Infinite Wealth.

That way, you’ll be able to inject extra frames into any DX10 or DX11 game with your AMD graphics card, no developer support required.

“Our research tells us 70 percent of customers are willing to compromise on image quality,” says AMD gaming chief Frank Azor.

AMD will sell its RX 7800 XT reference design directly at AMD.com with the two-fan cooler you see in the render atop this post.

The original article contains 771 words, the summary contains 194 words. Saved 75%. I'm a bot and I'm open source!

Like a Dragon BE KILLING IT with their staying up to date on FSR!

AMD needs to hurry the fuck up and catch up in the AI game

I disagree honestly. AI is overly hyped.

I think engineers and programmers need to think far more carefully about how commodity parallel machines with 16 GB of VRAM at 500 GB/s and 20 TFLOPs can improve their programs.

There's more to programming than just "Run Tensorflow 5% faster".
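
To put rough numbers on that, here is a back-of-the-envelope sketch in Python. It assumes the 16 GB / 500 GB/s / 20 TFLOP figures above, decimal units, and a simple roofline model. The function names are made up for illustration, not taken from any library.

```python
# Back-of-the-envelope roofline estimate. The hardware figures are the ones
# quoted in the comment above; GB and TFLOP are taken as decimal units (assumption).
PEAK_FLOPS = 20e12   # 20 TFLOP/s
BANDWIDTH = 500e9    # 500 GB/s
VRAM_BYTES = 16e9    # 16 GB


def balance_point(peak_flops, bandwidth):
    """FLOPs a kernel must do per byte moved before compute becomes the limit."""
    return peak_flops / bandwidth


def attainable_flops(intensity, peak_flops, bandwidth):
    """Simple roofline model: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, intensity * bandwidth)


if __name__ == "__main__":
    print(balance_point(PEAK_FLOPS, BANDWIDTH))  # FLOP per byte
    print(VRAM_BYTES / BANDWIDTH)                # seconds to read all of VRAM once
    # Hypothetical float32 vector add: 1 FLOP per 12 bytes (two loads, one store).
    print(attainable_flops(1 / 12, PEAK_FLOPS, BANDWIDTH))
```

With these figures the balance point works out to 40 FLOP per byte, and one full pass over 16 GB takes about 0.032 seconds. Anything that does less math per byte than that is limited by memory bandwidth, not by the TFLOPs.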

Upscaling games or interpolating frames looks really promising for improved fps.

Based on the demos of DLSS, I don't have a very high opinion of it. It seems to have the same "shining" problems that older methodologies had.

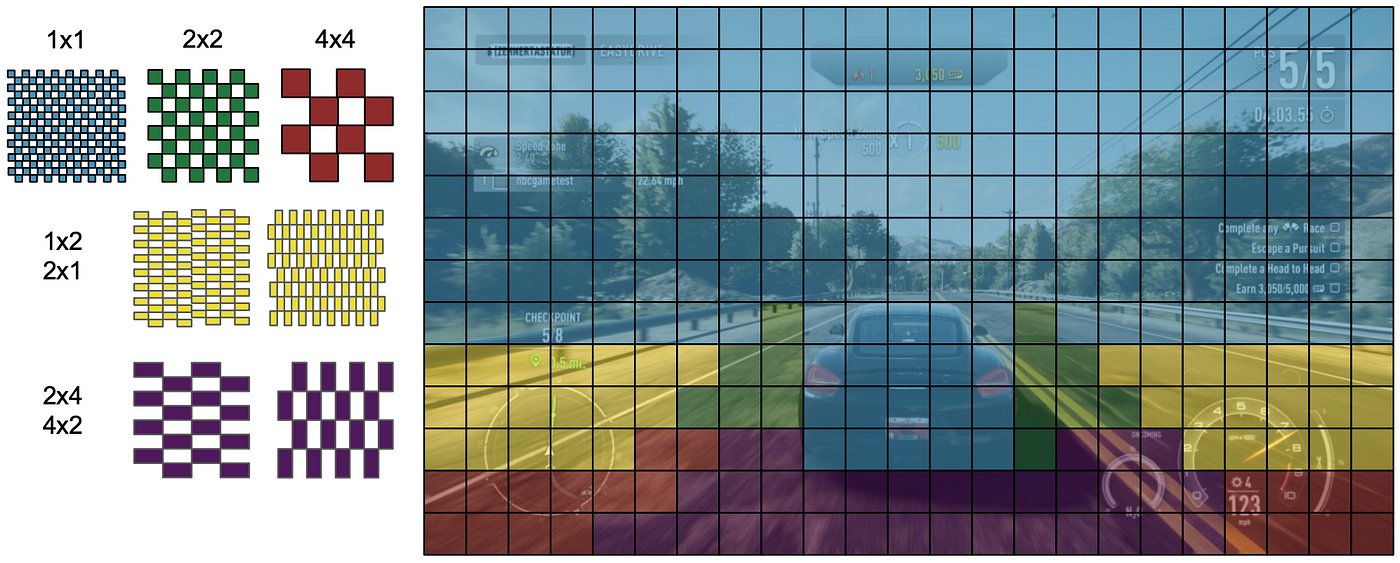

The promising high-quality stuff is VRS: those demos are incredible, though they require more manual work. Instead of rendering a 1080p "fake" 4k image and relying upon AI to upscale it... you render a 4k-image but tell the GPU which sections to render at 2x2 pixels instead.

Or even as low as 4x4 (aka: you may have a 3840 x 2160 pixel monitor, but the 4x4 region is rendered with roughly the same resources as 960 x 540 resolution). As it turns out, a huge number of regions in your video games are 4x4 or 2x2 appropriate, especially the ones that are obscured by fog, blur, and other effects layered on top.

Notice: the bottom of the screen of the above racing game will be obscured by motion blur. So why render it at full 3840 x 2160 resolution? It's just a waste of compute resources to render a high-quality image and then blur it away. Instead, it's rendered at 4x4 (aka: equivalent to 960 x 540), and after the blur, they basically look the same. The rest of the screen can be rendered at 1x1 (aka: full 3840 x 2160). Furthermore, it's very simple programming to figure out which areas have such blurs, and even the direction of the motion blur (ex: 2x1 vs 1x2 if you're doing horizontal vs vertical blur passes after the fact).
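
The arithmetic behind that can be sketched in a few lines of Python. This only counts pixel-shader invocations on the CPU; it is not how a real engine sets shading rates through the graphics API. The 540-row blurred strip, the function names, and the blur-direction heuristic are all assumptions made up for illustration.

```python
import math


def shaded_samples(width, height, rate_x, rate_y):
    """Shader invocations when each rate_x by rate_y pixel block is shaded once."""
    return math.ceil(width / rate_x) * math.ceil(height / rate_y)


def frame_cost(width, regions):
    """Total invocations for horizontal strips given as (rows, rate_x, rate_y)."""
    return sum(shaded_samples(width, rows, rx, ry) for rows, rx, ry in regions)


def pick_rate(blur_dx, blur_dy):
    """Hypothetical heuristic: shade coarser along the dominant blur direction."""
    if abs(blur_dx) > abs(blur_dy):
        return (2, 1)  # horizontal blur: halve horizontal shading resolution
    if abs(blur_dy) > abs(blur_dx):
        return (1, 2)  # vertical blur: halve vertical shading resolution
    return (1, 1)      # no dominant direction: keep full rate


if __name__ == "__main__":
    full = shaded_samples(3840, 2160, 1, 1)
    # Whole screen at 4x4 costs the same as shading 960 x 540.
    print(shaded_samples(3840, 2160, 4, 4) == 960 * 540)
    # Assumed split: top 1620 rows at 1x1, bottom 540 blurred rows at 4x4.
    mixed = frame_cost(3840, [(1620, 1, 1), (540, 4, 4)])
    print(mixed / full)  # fraction of full-rate shading work
```

In this made-up split the frame needs about 77% of the full-rate shading work, and the savings grow with how much of the screen sits under blur or fog.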

Meanwhile, DLSS is "ignorant" about the layers of effects (fog, blur, etc. etc.) and difficult to work into the overall rendering pipeline. VRS already is implemented in more games than DLSS ever was and is the future.

You see, when a feature is actually used, it quietly gets implemented into every video game in DirectX 12 Ultimate or PS5 or Xbox without anyone sweating. Everyone knows VRS is the future, no marketing needed. NVidia needed to push DLSS (unsuccessfully, IMO), because they're part of the AI hype train and trying to sell their AI cores.

Well, I never thought of the upscaling tech and AI in that way. That makes a lot of sense, but it's also depressing for the GPU market.

I, too, don't really like how the demos of both DLSS and FSR look. There's always something off about them.

Ta to mu caro